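Resource Roundup

Main content of this post

This post focuses on some other software projects that are relevant to the development process.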

Embassy

1. Task arena

from: https://docs.embassy.dev/embassy-executor/git/cortex-m/index.html

1.1 Attention: we set the arena size to the sum of sizes of all tasks.

When the nightly Cargo feature is not enabled, embassy-executor allocates tasks out of an arena (a very simple bump allocator).

If the task arena gets full, the program will panic at runtime. To guarantee this doesn’t happen, you must set the size to the sum of sizes of all tasks.
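To make the "bump allocator" idea concrete, here is a minimal sketch that only tracks offsets inside a fixed-size arena. The `Arena` type and its methods are hypothetical names for illustration and are not embassy-executor's actual implementation; the sketch returns `None` where the real executor would panic.

```rust
/// A toy bump allocator over a fixed-size arena (illustrative only;
/// NOT embassy-executor's real code). It tracks offsets, not real memory.
pub struct Arena<const SIZE: usize> {
    used: usize,
}

impl<const SIZE: usize> Arena<SIZE> {
    pub const fn new() -> Self {
        Self { used: 0 }
    }

    /// Reserve `size` bytes aligned to `align` (must be a power of two).
    /// Returns the offset of the reservation, or `None` if it does not fit.
    pub fn alloc(&mut self, size: usize, align: usize) -> Option<usize> {
        assert!(align.is_power_of_two());
        // Round the current offset up to the requested alignment.
        let start = (self.used + align - 1) & !(align - 1);
        let end = start.checked_add(size)?;
        if end > SIZE {
            // The arena is full; the sketch reports this instead of panicking.
            return None;
        }
        // Bump the offset. Nothing is ever freed individually.
        self.used = end;
        Some(start)
    }

    /// Bytes still available (ignoring future alignment padding).
    pub fn remaining(&self) -> usize {
        SIZE - self.used
    }
}
```

Because a bump allocator never reuses space, the arena has to be large enough for every task that will ever be spawned, which is why the size must cover the sum of all task sizes.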

1.2 Attention: we can set the arena size in two ways

The arena size can be configured in two ways:

- Via Cargo features: enable a Cargo feature like `task-arena-size-8192`. Only a selection of values is available, see Task Area Sizes for reference.
- Via environment variables at build time: set the variable named `EMBASSY_EXECUTOR_TASK_ARENA_SIZE`. For example `EMBASSY_EXECUTOR_TASK_ARENA_SIZE=4321 cargo build`. You can also set them in the `[env]` section of `.cargo/config.toml`. Any value can be set, unlike with Cargo features.

Environment variables take precedence over Cargo features. If two Cargo features are enabled for the same setting with different values, compilation fails.

2. Executor

from: https://docs.embassy.dev/embassy-executor/git/cortex-m/index.html#executor

2.1 Attention: executor-interrupt is available on Cortex-M only

- `executor-thread`: Enable the thread-mode executor (using WFE/SEV in Cortex-M, WFI in other embedded archs)
- `executor-interrupt`: Enable the interrupt-mode executor (available in Cortex-M only)

3. Attribute Macros

from: https://docs.embassy.dev/embassy-executor/git/cortex-m/index.html#attributes

3.1 Attention: we can spawn multiple tasks from the same function by setting pool_size

- `main`: Creates a new `executor` instance and declares an application entry point for Cortex-M spawning the corresponding function body as an async task.
- `task`: Declares an async task that can be run by `embassy-executor`. The optional `pool_size` parameter can be used to specify how many concurrent tasks can be spawned (default is 1) for the function.
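The effect of `pool_size` can be pictured as a fixed number of slots per task function: each spawn claims a slot, and spawning fails once all slots are busy. The sketch below is a host-side model under that assumption; `TaskPool`, `spawn`, and `finish` are hypothetical names, not embassy-executor APIs. In real code the size is set on the attribute, for example `#[embassy_executor::task(pool_size = 2)]`.

```rust
/// Hypothetical model of a task pool: `POOL_SIZE` slots, each able to hold
/// one running instance of the same task function.
pub struct TaskPool<const POOL_SIZE: usize> {
    busy: [bool; POOL_SIZE],
}

impl<const POOL_SIZE: usize> TaskPool<POOL_SIZE> {
    pub const fn new() -> Self {
        Self { busy: [false; POOL_SIZE] }
    }

    /// Claim a free slot. Returns its index, or `None` when every slot
    /// already holds a running task.
    pub fn spawn(&mut self) -> Option<usize> {
        let slot = self.busy.iter().position(|b| !*b)?;
        self.busy[slot] = true;
        Some(slot)
    }

    /// Mark a slot as free again once its task has finished.
    pub fn finish(&mut self, slot: usize) {
        self.busy[slot] = false;
    }
}
```

With `TaskPool::<2>`, the first two calls to `spawn` succeed and the third returns `None` until one of the running tasks finishes.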

4. What are const generics and how do we use them?

https://www.awwsmm.com/blog/what-are-const-generics-and-how-are-they-used-in-rust

https://doc.rust-lang.org/reference/items/generics.html#const-generics

    // Examples where const generic parameters can be used.

    // Used in the signature of the item itself.
    fn foo<const N: usize>(arr: [i32; N]) {
        // Used as a type within a function body.
        let x: [i32; N];
        // Used as an expression.
        println!("{}", N * 2);
    }

    // Used as a field of a struct.
    struct Foo<const N: usize>([i32; N]);

    impl<const N: usize> Foo<N> {
        // Used as an associated constant.
        const CONST: usize = N * 4;
    }

    trait Trait {
        type Output;
    }

    impl<const N: usize> Trait for Foo<N> {
        // Used as an associated type.
        type Output = [i32; N];
    }

5. Additional notes for the previous blog post

5.1 Note the types; don't mix them up

from embassy-executor/src/raw/mod.rs:189

    impl<F: Future + 'static> AvailableTask<F> {
        /// Try to claim a [`TaskStorage`].
        ///
        /// This function returns `None` if a task has already been spawned and has not finished running.
        pub fn claim(task: &'static TaskStorage<F>) -> Option<Self> {
            task.raw.state.spawn().then(|| Self { task })
        }

        fn initialize_impl<S>(self, future: impl FnOnce() -> F) -> SpawnToken<S> {
            unsafe {
                self.task.raw.poll_fn.set(Some(TaskStorage::<F>::poll));
                self.task.future.write_in_place(future);

                let task = TaskRef::new(self.task);

                SpawnToken::new(task)
            }
        }

        /// Initialize the [`TaskStorage`] to run the given future.
        pub fn initialize(self, future: impl FnOnce() -> F) -> SpawnToken<F> {
            self.initialize_impl::<F>(future)
        }
        ...
    }

Note that the parameter named future is not itself a future. It is a closure (impl FnOnce() -> F), and its return value is the actual Rust future: the generic type F, which implements the Future trait.

This leads us to the call self.task.future.write_in_place(future);, where the write_in_place function is the critical part:

from embassy-executor/src/raw/util.rs:5

    pub(crate) struct UninitCell<T>(MaybeUninit<UnsafeCell<T>>);

    impl<T> UninitCell<T> {
        pub const fn uninit() -> Self {
            Self(MaybeUninit::uninit())
        }

        pub unsafe fn as_mut_ptr(&self) -> *mut T {
            (*self.0.as_ptr()).get()
        }

        #[allow(clippy::mut_from_ref)]
        pub unsafe fn as_mut(&self) -> &mut T {
            &mut *self.as_mut_ptr()
        }

        #[inline(never)]
        pub unsafe fn write_in_place(&self, func: impl FnOnce() -> T) {
            ptr::write(self.as_mut_ptr(), func())
        }

        pub unsafe fn drop_in_place(&self) {
            ptr::drop_in_place(self.as_mut_ptr())
        }
    }

Note that write_in_place simply calls func (in our case the future parameter, which implements FnOnce() -> T) and writes the result into the cell. So what actually gets written into the UninitCell is the returned value, which is the type that truly implements the Future trait. In our example that UninitCell is the future field of TaskStorage:

from embassy-executor/src/raw/mod.rs:104

    #[repr(C)]
    pub struct TaskStorage<F: Future + 'static> {
        raw: TaskHeader,
        future: UninitCell<F>, // Valid if STATE_SPAWNED
    }
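The distinction between the closure and the future it returns can be tried out on the host with a reduced stand-in for the cell. This is a sketch, not embassy's code: `LazySlot` and the free function `initialize` are hypothetical names, and `UnsafeCell::raw_get` is used here to obtain the inner pointer without creating a reference to uninitialized memory.

```rust
use core::cell::UnsafeCell;
use core::future::Future;
use core::mem::MaybeUninit;
use core::ptr;

/// Reduced stand-in for an uninitialized cell (hypothetical, for illustration).
pub struct LazySlot<T>(MaybeUninit<UnsafeCell<T>>);

impl<T> LazySlot<T> {
    pub const fn uninit() -> Self {
        Self(MaybeUninit::uninit())
    }

    fn as_mut_ptr(&self) -> *mut T {
        UnsafeCell::raw_get(self.0.as_ptr())
    }

    /// Call `func` once and move its RETURN VALUE into the slot.
    /// Safety: the slot must not currently hold a live value.
    pub unsafe fn write_in_place(&self, func: impl FnOnce() -> T) {
        unsafe { ptr::write(self.as_mut_ptr(), func()) }
    }

    /// Safety: the slot must be initialized and not aliased.
    pub unsafe fn as_mut(&self) -> &mut T {
        unsafe { &mut *self.as_mut_ptr() }
    }

    /// Safety: the slot must be initialized and not used afterwards.
    pub unsafe fn drop_in_place(&self) {
        unsafe { ptr::drop_in_place(self.as_mut_ptr()) }
    }
}

/// Same shape as the `initialize` method quoted above: `make` is only a
/// closure, and the slot stores `F`, the future that the closure returns.
/// Safety: `slot` must be empty.
pub unsafe fn initialize<F: Future>(slot: &LazySlot<F>, make: impl FnOnce() -> F) {
    unsafe { slot.write_in_place(make) }
}
```

Calling `initialize(&slot, || async { 1u8 })` type-checks with the slot's type parameter inferred as the anonymous async block type, which shows that the stored type is the future and not the closure.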

6. Embassy Book

cortex-m pac

1. Interrupt Vector Table

from: https://github.com/rust-embedded/cortex-m?tab=readme-ov-file

cortex-m-rt: Startup code and interrupt handling

2. the Attributes:

from: https://docs.rs/cortex-m-rt/latest/cortex_m_rt/

2.1 Intro

This crate also provides the following attributes:

- `#[entry]` to declare the entry point of the program
- `#[exception]` to override an exception handler. If not overridden all exception handlers default to an infinite loop.
- `#[pre_init]` to run code before `static` variables are initialized

This crate also implements a related attribute called #[interrupt], which allows you to define interrupt handlers. However, since which interrupts are available depends on the microcontroller in use, this attribute should be re-exported and used from a device crate.

The documentation for these attributes can be found in the Attribute Macros section.

2.2 the Attribute Macros

entry: Attribute to declare the entry point of the program

exception: Attribute to declare an exception handler

interrupt: Attribute to declare an interrupt (AKA device-specific exception) handler

pre_init: Attribute to mark which function will be called at the beginning of the reset handler.

2.3 Attribute Macro cortex_m_rt::exception

Syntax

    #[exception]
    fn SysTick() {
        // ..
    }

where the name of the function must be one of:

- DefaultHandler
- NonMaskableInt
- HardFault
- MemoryManagement (a)
- BusFault (a)
- UsageFault (a)
- SecureFault (b)
- SVCall
- DebugMonitor (a)
- PendSV
- SysTick

(a) Not available on Cortex-M0 variants (thumbv6m-none-eabi)

(b) Only available on ARMv8-M

Usage

#[exception] unsafe fn HardFault(.. sets the hard fault handler. The handler must have signature unsafe fn(&ExceptionFrame) -> !. This handler is not allowed to return as that can cause undefined behavior.

#[exception] unsafe fn DefaultHandler(.. sets the default handler. All exceptions which have not been assigned a handler will be serviced by this handler. This handler must have signature unsafe fn(irqn: i16) [-> !]. irqn is the IRQ number (See CMSIS); irqn will be a negative number when the handler is servicing a core exception; irqn will be a positive number when the handler is servicing a device specific exception (interrupt).

#[exception] fn Name(.. overrides the default handler for the exception with the given Name. These handlers must have signature [unsafe] fn() [-> !]. When overriding these other exceptions it's possible to add state to them by declaring static mut variables at the beginning of the body of the function. These variables will be safe to access from the function body.

Drone RTOS

A reference on the state of RTOS research in Rust: https://arewertosyet.com/

We need two repositories; their directory layout is:

    ├── drone
    │   ├── CHANGELOG.md
    │   ├── Cargo.lock
    │   ├── Cargo.toml
    │   ├── LICENSE-APACHE
    │   ├── LICENSE-MIT
    │   ├── README.md
    │   ├── config
    │   ├── flake.lock
    │   ├── flake.nix
    │   ├── openocd
    │   ├── project-templates
    │   ├── rustfmt.toml
    │   ├── src
    │   ├── stream
    │   └── templates
    └── drone-core
        ├── CHANGELOG.md
        ├── Cargo.toml
        ├── LICENSE-APACHE
        ├── LICENSE-MIT
        ├── README.md
        ├── flake.lock
        ├── flake.nix
        ├── macros
        ├── macros-core
        ├── rustfmt.toml
        ├── src
        └── tests

https://github.com/drone-os/drone

https://github.com/drone-os/drone-core

The following is quoted from the Drone book: https://book.drone-os.com/introduction.html

1. Memory Allocation

Dynamic memory is crucial for Drone operation. Objectives like real-time characteristics, high concurrency, small code size, fast execution have led to Memory Pools design of the heap. All operations are lock-free and have O(1) time complexity, which means they are deterministic.

The continuous memory region for the heap is split into pools. A pool is further split into fixed-sized blocks that hold actual allocations. A pool is defined by its block-size and the number of blocks. The pools configuration should be defined in the compile-time. A drawback of this approach is that memory pools may need to be tuned for the application.

Using empiric values for the memory pools layout may lead to undesired memory fragmentation. Eventually the layout will need to be tuned for the application. Drone can capture allocation statistics from the real target device at the run-time and generate an optimized memory layout for this specific application. Ideally this will result in zero fragmentation.

The actual steps are platform-specific. Refer to the platform crate documentation for instructions.
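The memory pools design described above can be sketched as follows. This is a single-threaded host-side model, not Drone's lock-free implementation: `Pool`, `Block`, and `PoolHeap` are hypothetical names, block indices stand in for real addresses, and falling through to a larger pool when the best-fitting one is exhausted is an assumption of the sketch.

```rust
/// One pool: a set of equally sized blocks.
pub struct Pool {
    block_size: usize,
    free: Vec<usize>, // indices of free blocks, used as a LIFO stack
}

impl Pool {
    pub fn new(block_size: usize, capacity: usize) -> Self {
        // Reversed so that block 0 is handed out first.
        Self { block_size, free: (0..capacity).rev().collect() }
    }
}

/// Handle for an allocation: which pool, and which block inside it.
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
pub struct Block {
    pub pool: usize,
    pub index: usize,
}

pub struct PoolHeap {
    pools: Vec<Pool>, // sorted by ascending block size
}

impl PoolHeap {
    /// `layout` is a list of `(block_size, capacity)` pairs fixed up front,
    /// mirroring the compile-time pool configuration.
    pub fn new(layout: &[(usize, usize)]) -> Self {
        let mut pools: Vec<Pool> = layout.iter().map(|&(s, c)| Pool::new(s, c)).collect();
        pools.sort_by_key(|p| p.block_size);
        Self { pools }
    }

    /// Take a block from the smallest pool that fits `size` and has a free
    /// block. The pool count is fixed, so the search is bounded by a constant.
    pub fn alloc(&mut self, size: usize) -> Option<Block> {
        for (pool, p) in self.pools.iter_mut().enumerate() {
            if p.block_size >= size {
                if let Some(index) = p.free.pop() {
                    return Some(Block { pool, index });
                }
            }
        }
        None
    }

    /// Return a block to its pool.
    pub fn free(&mut self, block: Block) {
        self.pools[block.pool].free.push(block.index);
    }

    /// Bytes wasted when `size` bytes occupy `block` (internal fragmentation).
    pub fn waste(&self, block: Block, size: usize) -> usize {
        self.pools[block.pool].block_size - size
    }
}
```

The `waste` method makes the tuning problem visible: a 5-byte allocation placed in an 8-byte block wastes 3 bytes, so a layout whose block sizes match the application's real allocation sizes is what reduces fragmentation.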

1.1 we need the platform module and stream module

To fit our project structure, I decided to add it to the helper directory. The resulting layout looks like this:

    ├── helper
    │   ├── linked_list.rs
    │   ├── macros.rs
    │   ├── mod.rs
    │   ├── runtime.rs
    │   └── soft_atomic.rs

To use stream, I had to import its dependency, drone_stream, directly into mod.rs.

In the end our project looks like this (I have omitted the parts that are not related to our adaptation of Drone):

    ├── heap
    │   ├── mod.rs
    │   ├── pool.rs
    │   └── trace.rs
    ├── helper
    │   ├── linked_list.rs
    │   ├── macros.rs
    │   ├── mod.rs
    │   ├── runtime.rs
    │   └── soft_atomic.rs
    ├── lang_items.rs
    ├── lib.rs
    ├── platform
    │   ├── interrputs.rs
    │   └── mod.rs

1.2 with heap we can do more thing like smart pointer

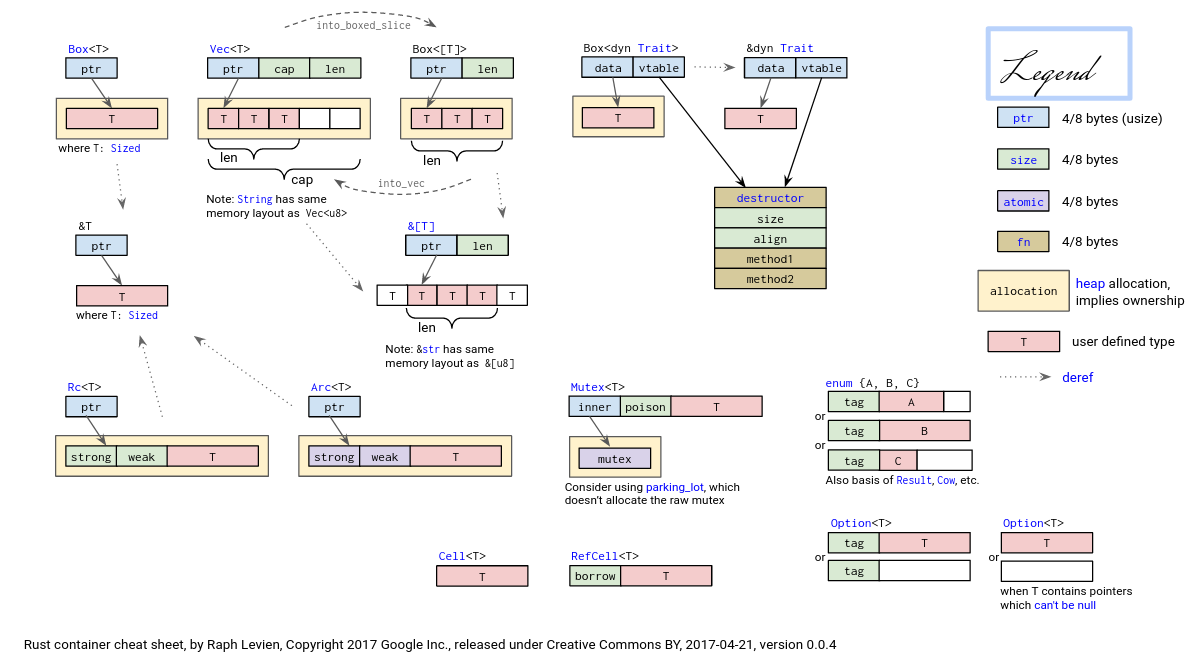

the mind map of the smart pointer’s memory allocation

1.3 Problem: we need the heap! macro

According to the API doc:

https://api.drone-os.com/drone-core/0.14/drone_core/heap/index.html#usage

    use drone_core::heap;

    // Define a concrete heap type with the layout defined in the Drone.toml
    heap! {
        // Heap configuration key in `Drone.toml`.
        config => main;
        /// The main heap allocator generated from the `Drone.toml`.
        metadata => pub Heap;
        // Use this heap as the global allocator.
        global => true;
        // Uncomment the following line to enable heap tracing feature:
        // trace_port => 31;
    }

    // Create a static instance of the heap type and declare it as the global
    // allocator.
    /// The global allocator.
    #[global_allocator]
    pub static HEAP: Heap = Heap::new();

We need to use the heap! macro, which is a Rust proc-macro, so we have to understand its syntax.

You also need to know how Cargo workspaces work.

Because I found the macro in Drone poorly designed, I gave up porting it to my project and tried to build a global allocator myself.

Here are my references:

Heap Allocation | Writing an OS in Rust

Allocator Designs | Writing an OS in Rust

While implementing the allocator above, as the tutorial discusses, we also need a linked list to optimize the design:

Allocator Designs | Writing an OS in Rust

I kept the tutorial's linked list example, but still use the linked_list_allocator crate because it merges adjacent freed blocks.
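To show why merging freed blocks matters, here is a small host-side model of a free list that coalesces neighbours on free. It is only a sketch: `FreeList` is a hypothetical type that stores `(start, size)` pairs in a sorted `Vec`, whereas a real linked list allocator keeps the list nodes inside the free memory itself.

```rust
/// Free regions as `(start, size)` pairs, kept sorted by `start`.
#[derive(Default)]
pub struct FreeList {
    regions: Vec<(usize, usize)>,
}

impl FreeList {
    pub fn new() -> Self {
        Self::default()
    }

    /// Return a region to the list, merging it with adjacent neighbours.
    pub fn free(&mut self, start: usize, size: usize) {
        // Index of the first region that begins after the freed one.
        let idx = self.regions.partition_point(|&(s, _)| s < start);
        self.regions.insert(idx, (start, size));

        // Merge with the following region if the two touch.
        if idx + 1 < self.regions.len() {
            let (next_start, next_size) = self.regions[idx + 1];
            if start + size == next_start {
                self.regions[idx].1 += next_size;
                self.regions.remove(idx + 1);
            }
        }
        // Merge with the preceding region if the two touch.
        if idx > 0 {
            let (prev_start, prev_size) = self.regions[idx - 1];
            if prev_start + prev_size == start {
                self.regions[idx - 1].1 += self.regions[idx].1;
                self.regions.remove(idx);
            }
        }
    }

    /// First-fit allocation: carve `size` bytes off the first region that
    /// is large enough. Returns the start offset.
    pub fn alloc(&mut self, size: usize) -> Option<usize> {
        let idx = self.regions.iter().position(|&(_, len)| len >= size)?;
        let (start, len) = self.regions[idx];
        if len == size {
            self.regions.remove(idx);
        } else {
            self.regions[idx] = (start + size, len - size);
        }
        Some(start)
    }

    pub fn regions(&self) -> &[(usize, usize)] {
        &self.regions
    }
}
```

Without the two merge steps, freeing three adjacent blocks would leave three small regions, and a later request for their combined size would fail even though the memory is contiguous and free.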